AGI Alignment Experiments: Foundation vs INSTRUCT, various Agent Models

By a mysterious writer

Description

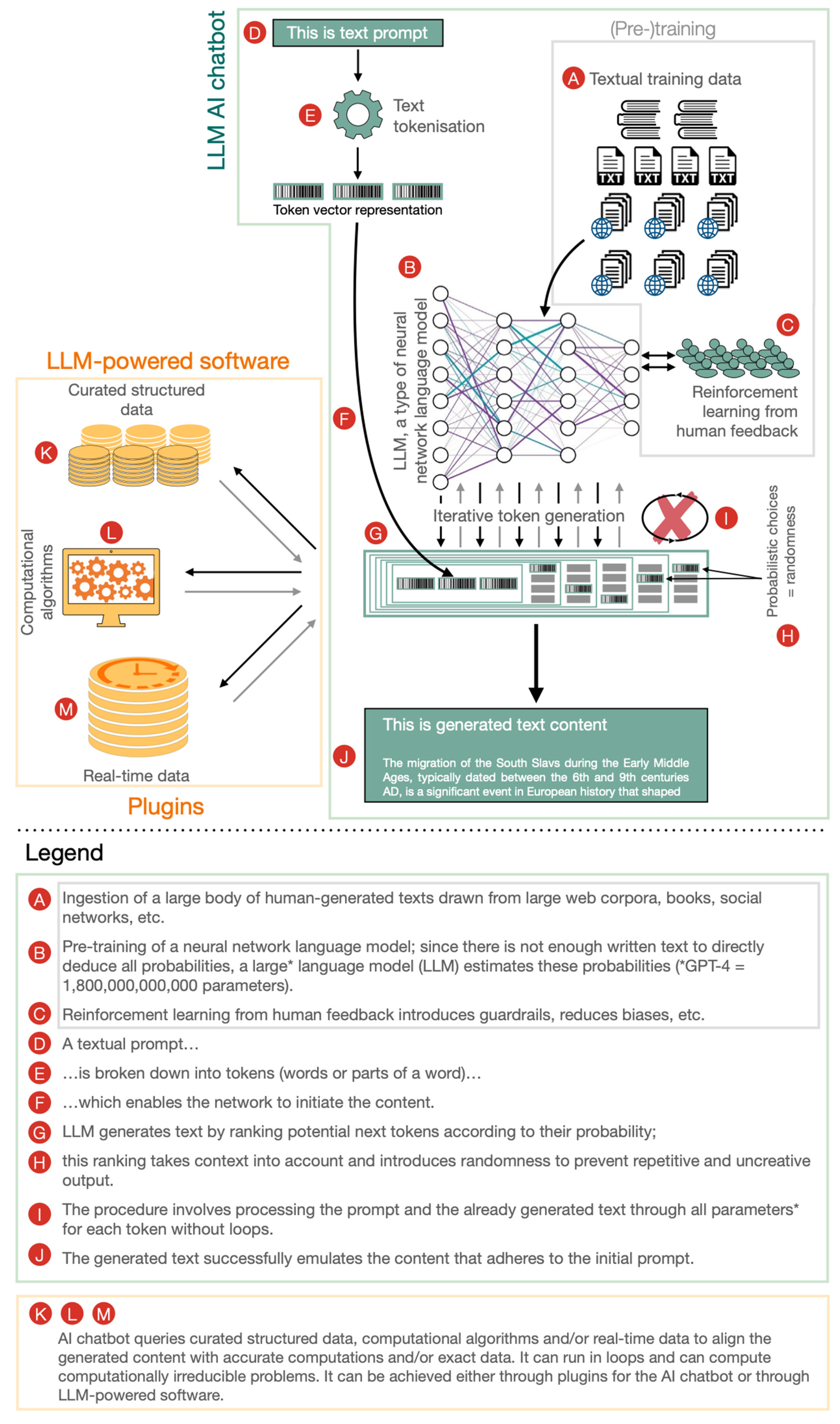

Here’s the companion video:

Here’s the GitHub repo with data and code:

Here’s the writeup:

Recursive Self Referential Reasoning

This experiment is meant to demonstrate the concept of “recursive, self-referential reasoning”: a Large Language Model (LLM) is given an “agent model” (an identity defined in natural language), and its thought process is evaluated in a long-term simulation environment.

Here is an example of an agent model. This one tests the Core Objective Function
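The loop described above (an agent model prepended to every prompt, with the model's earlier thoughts fed back in at each step) can be sketched roughly as follows. This is a minimal illustration and not the code from the post's repository: `run_simulation`, `echo_model`, the prompt layout, and the placeholder identity text are all assumptions made here, and `echo_model` is a stand-in for a real LLM call.

```python
from typing import Callable, List


def run_simulation(
    agent_model: str,
    scenario: str,
    complete: Callable[[str], str],
    steps: int = 3,
) -> List[str]:
    """Run a self-referential loop: each step sees all earlier thoughts.

    `complete` is any function mapping a prompt to a completion
    (hypothetical interface; swap in a real LLM client here).
    """
    thoughts: List[str] = []
    for _ in range(steps):
        history = "\n".join(thoughts)
        # The agent model (natural-language identity) leads every prompt.
        prompt = (
            f"{agent_model}\n\n"
            f"Scenario: {scenario}\n\n"
            f"Previous thoughts:\n{history}\n\n"
            "Next thought:"
        )
        thoughts.append(complete(prompt).strip())
    return thoughts


def echo_model(prompt: str) -> str:
    """Stand-in for an LLM: reports how many prior thoughts it was shown."""
    tail = prompt.split("Previous thoughts:\n", 1)[1]
    previous = tail.rsplit("\n\nNext thought:", 1)[0]
    seen = len([line for line in previous.splitlines() if line])
    return f"thought {seen + 1}"


if __name__ == "__main__":
    # Placeholder identity; the post's actual agent model text is not reproduced here.
    identity = "<natural-language agent model goes here>"
    for line in run_simulation(identity, "<scenario description>", echo_model):
        print(line)
```

Passing the completion function in as a parameter keeps the loop independent of any particular model, which would make it straightforward to compare a foundation model against an instruction-tuned one under the same agent model.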

AGI Alignment Experiments: Foundation vs INSTRUCT, various Agent Models - Community - OpenAI Developer Forum

What Does It Mean to Align AI With Human Values?

leewayhertz.com-Auto-GPT Unleashing the power of autonomous AI agents.pdf

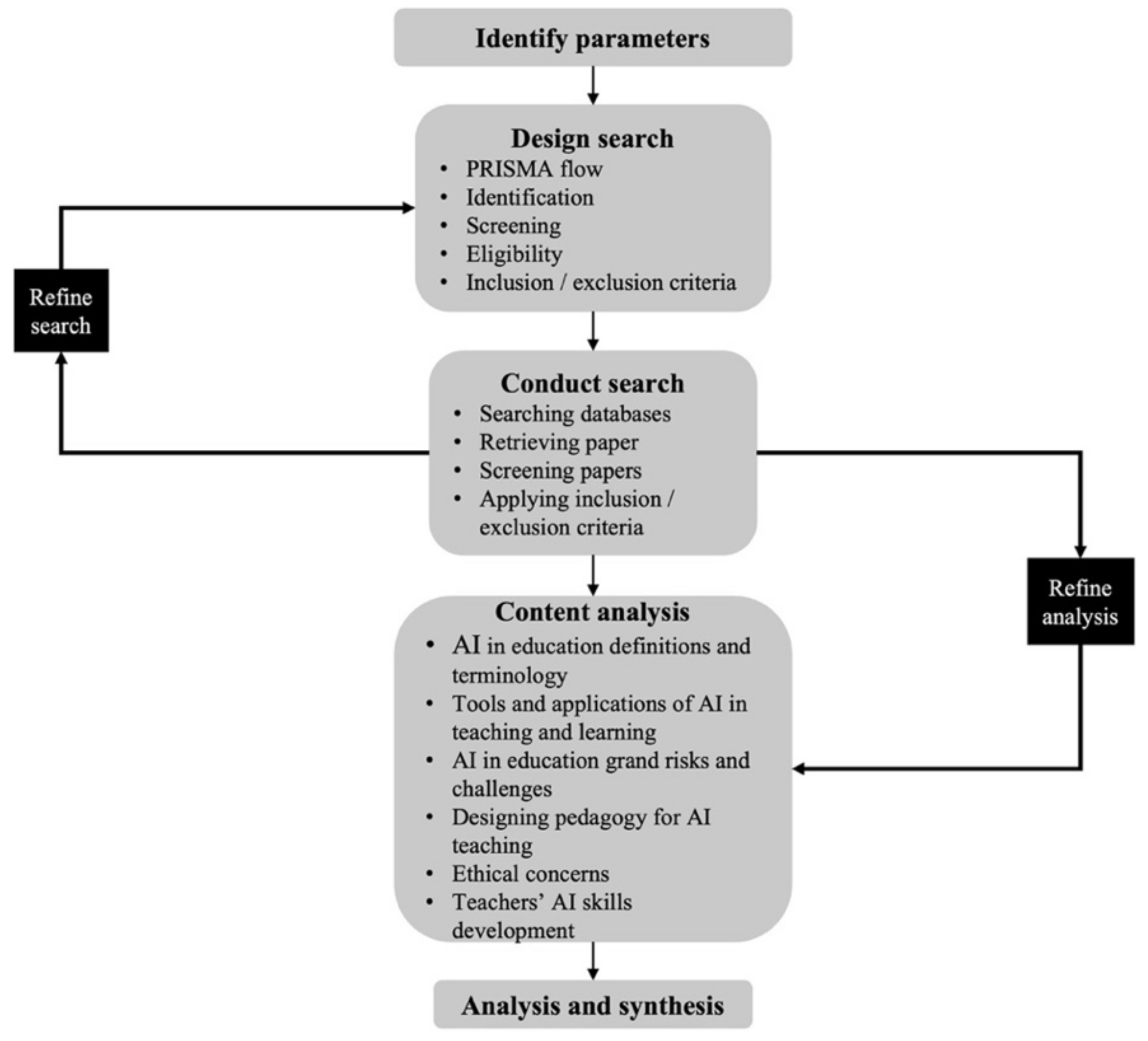

Future Internet, Free Full-Text

AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors in Agents – arXiv Vanity

Auto-GPT: Unleashing the power of autonomous AI agents

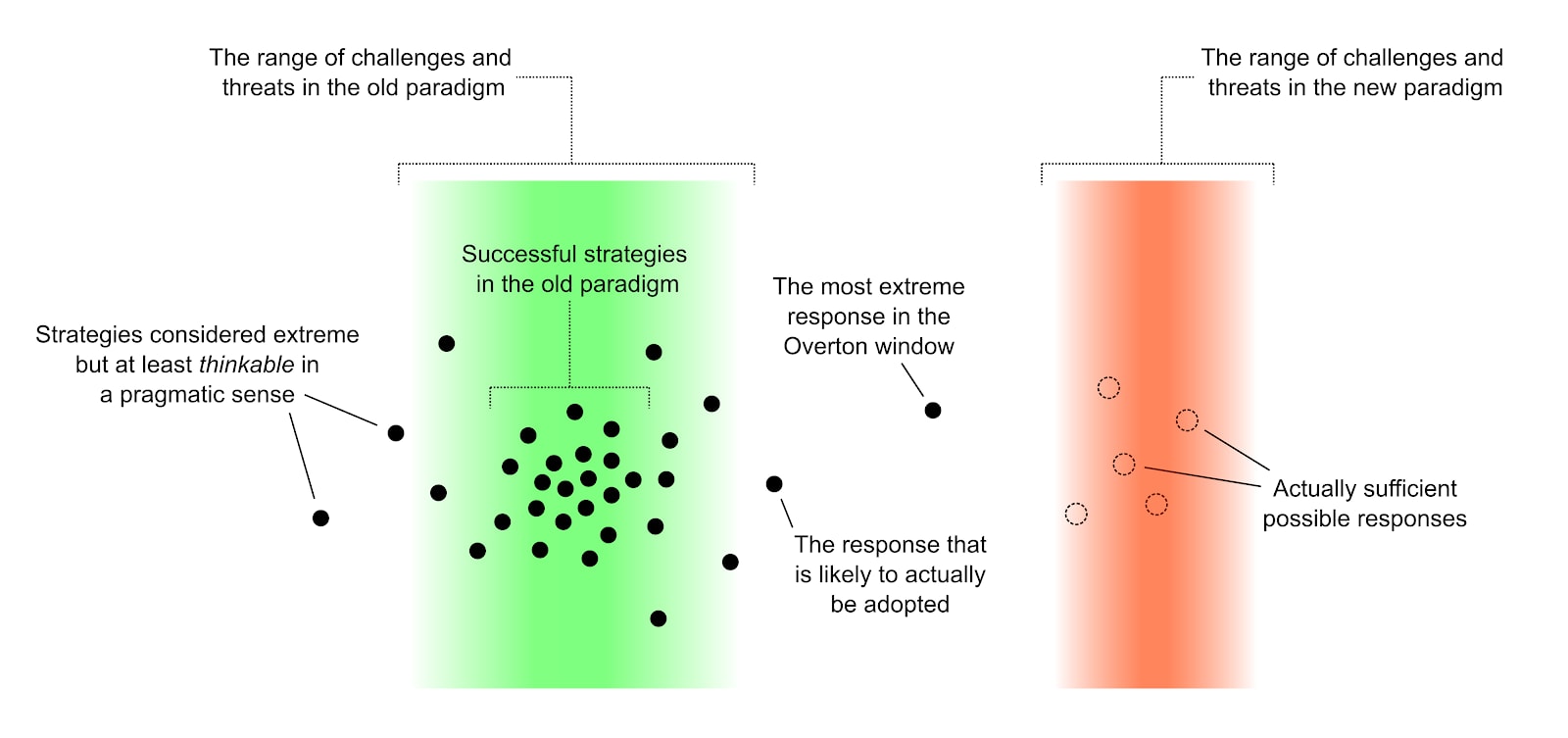

Six Dimensions of Operational Adequacy in AGI Projects — AI Alignment Forum

(My understanding of) What Everyone in Technical Alignment is Doing and Why — AI Alignment Forum

Florence-2: Revolutionizing AI with Multi-Task Vision Models

Information, Free Full-Text
